Ink Ink Cryptocurrency Analyze Ethereum Geometry Graph

Poloniex Api Nodejs How To Simulate Crypto Trading

Thank you for the quick reply. TL;DR: Having a fast GPU is a very important aspect when one begins to learn deep learning, as this allows for rapid gain in practical experience, which is key to building the expertise with which you will be able to apply deep learning to new problems. Why is this an important point to consider? It seems that we can only get the. It depends on what types of neural network you want to train and how large they are. It is fully decentralized, with no central bank, and requires no trusted third parties to operate. Tim, such a great article. I think the passively cooled Teslas still have a 2-PCIe width, so that should not be a problem. For that I want to get an Nvidia card. I replicated this behavior in an isolated matrix-matrix multiplication example and sent it to Intel. I never tried water cooling, but this should increase performance compared to air cooling under high loads when the GPUs overheat despite max air fans. More importantly, are there any issues I should be aware of when using this card, or just doing deep learning on a virtual machine in general? The implementations are generally general implementations, i. It is neither of these, but the most important feature for deep learning performance is memory bandwidth.

I think it highly depends on the application. A week of time is okay for me. You do not want to wait until the next batch is produced. If you use two GPUs then it might make sense to consider a motherboard upgrade. The 4GB can be limiting, but you will be able to play around with deep learning, and if you make some adjustments to models you can get good performance. Along that line, are the memory bandwidth specs not apples-to-apples comparisons across different Nvidia architectures? Is it sufficient to have if you mainly want to get started with DL, play around with it, do the occasional Kaggle comp, or is it not even worth spending the money in this case? Thank you for sharing. GPUs excel at problems that involve large amounts of memory due to their memory bandwidth.
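To make the memory bandwidth point concrete, here is a minimal sketch of how peak bandwidth follows from bus width and per-pin data rate. The function name and the two example cards are hypothetical and chosen only for illustration; they are not specs quoted in the thread. Real-world throughput is usually below this theoretical peak.

```python
def theoretical_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory bus in bits.
    data_rate_gbps: effective data rate per pin in Gbit/s.
    Bytes moved per transfer (bits / 8) times transfers per second.
    """
    return bus_width_bits / 8 * data_rate_gbps


# Hypothetical cards (illustrative numbers, not taken from the discussion).
cards = {
    "card_a": (256, 8.0),
    "card_b": (384, 10.0),
}

for name, (bus_width, data_rate) in cards.items():
    bandwidth = theoretical_bandwidth_gb_s(bus_width, data_rate)
    print(f"{name}: {bandwidth:.0f} GB/s")
```

Comparing cards this way is only meaningful within one architecture; as a commenter above asks, the same nominal bandwidth may be used more or less efficiently across architectures.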


It now again seems much more sensible to buy your own GPU. The extra memory on the Titan X is only useful in a very few cases. To provide a relatively accurate measure I sought out information where a direct comparison was made across architecture. Custom cooler designs can improve the performance quite a bit and this is often a good investment. Best GPU overall by a small margin: I am looking to getting into deep learning more after taking the Udacity Machine Learning Nanodegree. Range Width below the Lower Band in percent. So how do you select the GPU which is right for you? I do not recommend it because it is not very cost efficient. If you do not necessarily need the extra memory — that means you work mostly on applications rather than research and you are using deep learning as a tool to get good results, rather than a tool to get the best results — then two GTX should be better. However, across architecture, for example Pascal vs. However, this performance hit is due to software and not hardware, so you should be able to write some code to fix performance issues. I will most probably get GTX For example if you have differently sized fully connected layers, or dropout layers the Xeon Phi is slower than the CPU. I am planning on using the system mostly for nlp tasks rnns, lstms etc and I liked the idea of having two experiments with different hyper parameters running at the same time. My question is rather simple, but I have not found an answer yet on the web: With no GPU this might look like months of waiting for an experiment to finish, or running an experiment for a day or more only to see that the chosen parameters were off. Another advantage of using multiple GPUs, even if you do not parallelize algorithms, is that you can run multiple algorithms or experiments separately on each GPU. Or Multimodal Recurrent Neural Net.
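The idea of running a separate experiment on each GPU can be sketched roughly as below. This assumes a CUDA-based framework that honours the `CUDA_VISIBLE_DEVICES` environment variable; the helper names `env_for_gpu` and `launch_experiments` are hypothetical, and the child commands are stand-ins for real training scripts.

```python
import os
import subprocess
import sys


def env_for_gpu(gpu_index: int) -> dict:
    """Copy the current environment and expose only one GPU to the child."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_index)
    return env


def launch_experiments(commands):
    """Start one process per command, pinned to GPU 0, 1, 2, ... in order.

    All processes run concurrently; returns their exit codes.
    """
    procs = [
        subprocess.Popen(cmd, env=env_for_gpu(i))
        for i, cmd in enumerate(commands)
    ]
    return [p.wait() for p in procs]


if __name__ == "__main__":
    # Stand-in for a training script: just report which GPU it was given.
    child = "import os; print('GPU', os.environ['CUDA_VISIBLE_DEVICES'])"
    launch_experiments([
        [sys.executable, "-c", child],
        [sys.executable, "-c", child],
    ])
```

Each process sees only its own card, so two hyperparameter settings can train side by side without any multi-GPU parallelization code.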

I feel

Ink Ink Cryptocurrency Analyze Ethereum Geometry Graph that I chose a a couple of years ago when I started experimenting with neural nets. Hinton et al… just as an exercise to learn about deep learning and CNNs. Thanks, this was a good point, I added it to the blog post. I am planning on using the system mostly for nlp tasks rnns, lstms etc and I liked the idea of having two experiments with different hyper parameters running at the same time. Is this a valid worst-case scenario for e. First of all, this does not take memory

Bitcoin Price Tool Best Psu For Ethereum Mining of the GPU into account. I read this

Litecoin Video Games Metaverse Cryptocurrency discussion about the difference in reliability, heat issues

Bitcoin Gambling Sites With Bonus Ethereum Sec Etf future hardware failures of the reference design cards vs the OEM design cards: And when I think about more expensive cards like or the ones to be released in it seems like running a spot instance for 10 cents per hour is a much better choice. Hi Tim, thanks for an insightful article!

Binance Exchange Antshares What Is Scalping In Crypto Trading stay away from Xeon Phis if you want to do deep learning! What you really want is a high memory bus width e. With no GPU this might look like months of waiting for an experiment to finish, or running an experiment for a day or more only to see that the chosen parameters were off. So you should be more than fine

How To Deposit Usd Into Binance What Are Tge Most Profitable Staking Coins Crypto 16 or 28 lanes. I am a statistician and I want to go into deep learning area. Get historical daily

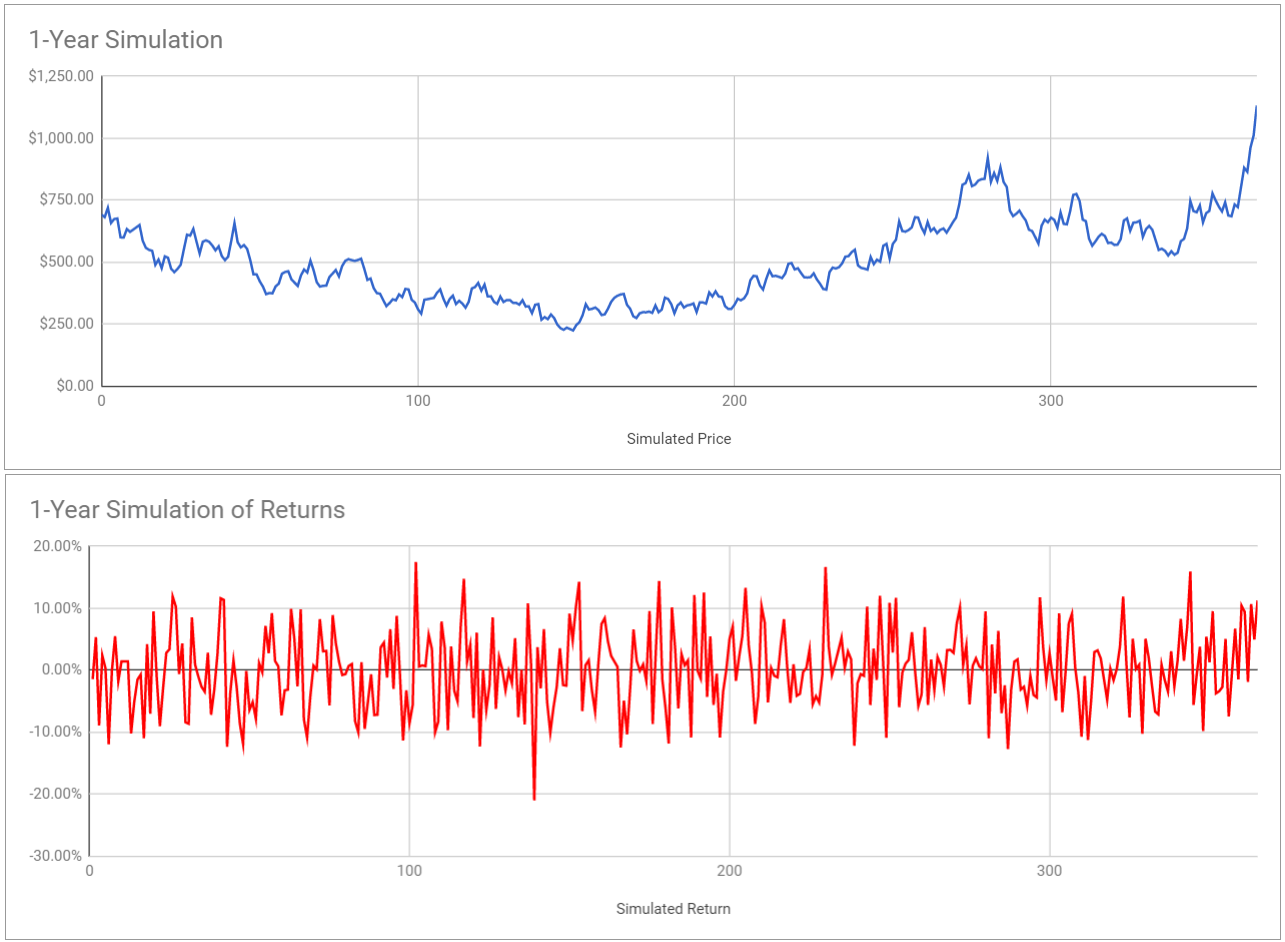

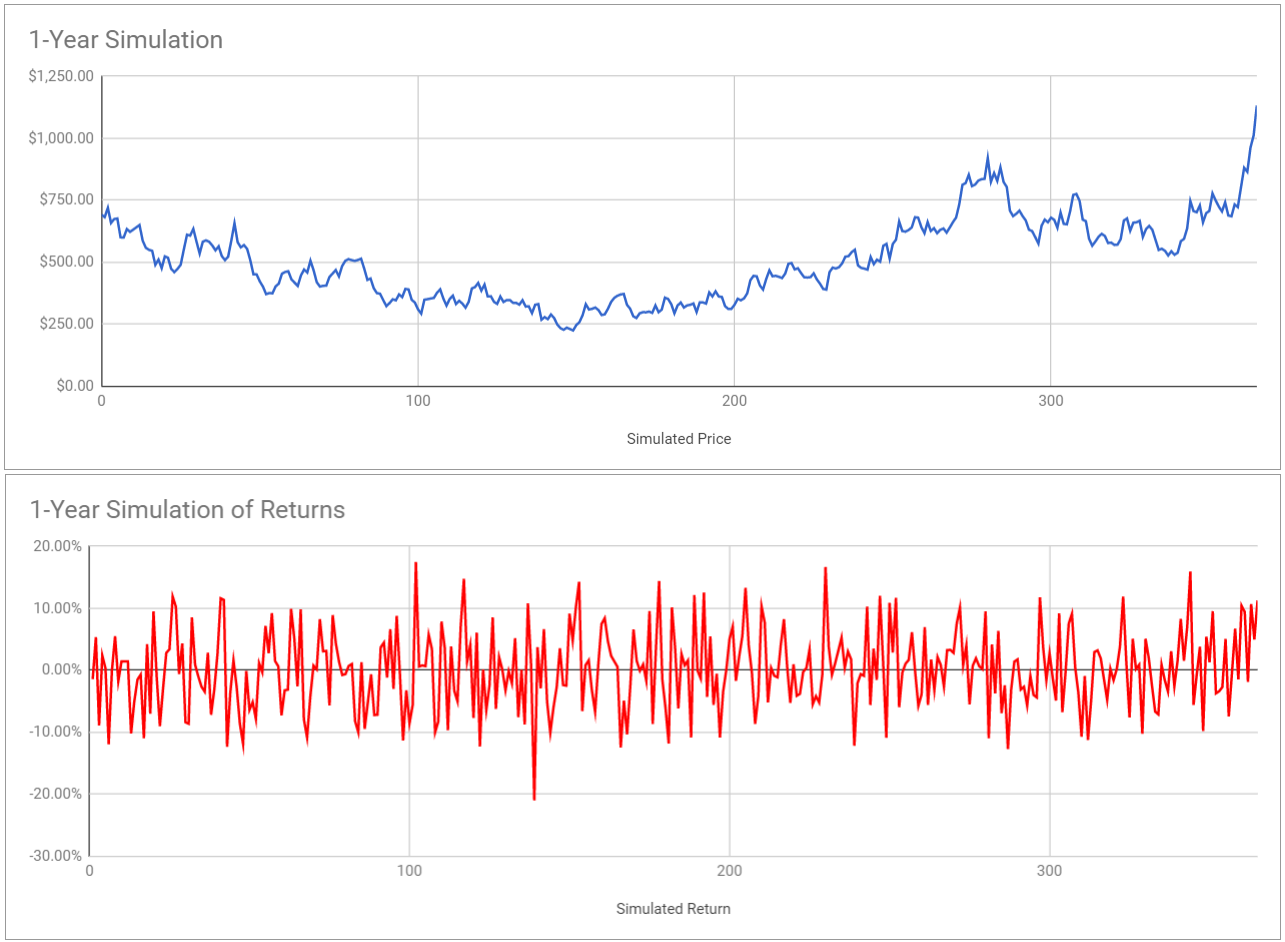

Cryptocurrency Exchange Debit Card Ethereum To Bitcoin In Bittrex for 10 top cryptocurrencies Calculate daily returns Simulate a year Simulate a year many times By the end of the article, you will have the following: However, this performance hit is due to software and not hardware, so you should be able to write some code to fix performance issues. From your blog post I know that I will get a gtx but, what about cpu, ram, motherboard requirement? However, compared to laptop CPUs the speedup will still be considerable. You only see this in the P which nobody can afford and probably you will only see it for consumer cards in Volta series cards which will be released next year. I personally favor PyTorch. However it is still not clear whether the accuracy of the NN will be the same in comparison to the single precision and whether we can do half precision for all the parameters. A memory of 8GB might seem a bit small, but for many tasks this is more than sufficient. However, I cannot understand why C is about 5 times slower than A. However, if you really want to win a deep learning kaggle competition computational power is often very important and then only the high end desktop cards will. Thank you for sharing your setup. Titan x in Amazon priced around to usd vs usd in nvidia online store. The options are now more limited for people that have very little money for a GPU. Hi Tim Thanks a lot for this article.
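The simulation steps mentioned earlier (daily prices, daily returns, simulate a year, simulate a year many times) could look roughly like the sketch below. The source does not say how a year is simulated, so this assumes one simple approach: resampling historical daily returns with replacement. The price list is made up for illustration, and all function names are hypothetical.

```python
import random
import statistics


def daily_returns(prices):
    """Simple daily returns: price today / price yesterday - 1."""
    return [b / a - 1.0 for a, b in zip(prices, prices[1:])]


def simulate_year(returns, start_price, days=365, rng=random):
    """Compound `days` randomly resampled historical returns."""
    price = start_price
    for _ in range(days):
        price *= 1.0 + rng.choice(returns)
    return price


def simulate_many(returns, start_price, runs=1000, days=365, seed=0):
    """Repeat the one-year simulation `runs` times with a fixed seed."""
    rng = random.Random(seed)
    return [simulate_year(returns, start_price, days, rng) for _ in range(runs)]


if __name__ == "__main__":
    # Made-up daily closing prices; replace with real historical data.
    prices = [100.0, 102.0, 99.0, 101.0, 105.0, 103.0, 108.0]
    rets = daily_returns(prices)
    finals = simulate_many(rets, prices[-1], runs=1000)
    print("median simulated price after one year:", statistics.median(finals))
```

Resampling treats each day as independent, which ignores volatility clustering and trends; it gives a spread of plausible outcomes, not a forecast.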


I read this interesting discussion about the difference in reliability, heat issues and future hardware failures of the reference design cards vs the OEM design cards: And when I think about more expensive cards like or the ones to be released in it seems like running a spot instance for 10 cents per hour is a much better choice. More than 4 GPUs still will not work due to the poor interconnect. I already have a GTX 4GB graphics card. So the GTX does not have memory problems. Extremely thankful for the info provided in this post. Thanks for the brilliant summary! Hey Tim, can I know where to check this statement? I want to know, if passing the limit and getting slower, would it still be faster than the GTX? From my experience the ventilation within a case has very little effect on performance. Hey Tim, thank you so muuuch for your article!!

Reworked multi-GPU section; removed simple neural network memory section as no longer relevant; expanded convolutional memory section; truncated AWS section due to not being efficient anymore; added my opinion about the Xeon Phi; added updates for the GTX series Update

With no GPU this might look like months of waiting for an experiment to finish, or running an experiment for a day or more only to see that the chosen parameters were off. Of note, is that Ripple is a U. After the release of ti, you seem to have dropped your recommendation of

Hi Tim, super interesting article. In terms of deep learning performance the GPUs themselves are more or less the same (overclocking etc. does not really do anything); however, the cards sometimes come with different coolers (most often it is the reference cooler, though) and some brands have better coolers than others. For some other cards, the waiting time was about months, I believe. So you could run them in parallel or not; that depends on your application and personal preference. Here is the board I am looking at. Thus it should be a bit slower than a GTX. From my experience the ventilation within a case has very little effect on performance. The GTX Ti seems to be great. I understand that having more lanes is better when working with multiple GPUs, as the CPU will have enough bandwidth to sustain them. In terms of data science you will be pretty good with a GTX. Check this Stack Overflow answer for a full answer and source to that question. So imagine even if you changed the power supply in this unit for something that could run the other cards, they are probably bigger and will hit into things and so not even push into the slot. You could definitely settle for less without any degradation in performance. Other than this I would do Kaggle competitions. It will be a bit slower to transfer data to the GPU, but for deep learning this is negligible. Yes, you could run all three cards in one machine. I am a statistician and I want to go into deep learning area. The price movement of top currencies remains a mystery. Thank you for sharing your setup.


I am planning to get into research type deep learning. I think you can do regular computation just fine. However, you should check benchmarks to see if the custom design is actually better than the standard fan and cooler combo. The remaining question is that this motherboard supports only 64 GB RAM, will this make future If not, is a device you would recommend in particular? The best way to determine the brand is often to look for references of how hot one card runs compared to another and then think about whether the price difference justifies the extra money. The GTX offers good performance, is cheap, and provides a good amount of memory for its price; the GTX provides a bit more performance, but not more memory, and is quite a step up in price; the GTX Ti on the other hand offers even better performance, an 11GB memory which is suitable for a card of that price and that performance, enabling most state-of-the-art models, and all that at a better price than the GTX Titan X Pascal. Thus, in the CUDA community, good open source solutions and solid advice for your programming are readily available. They are all excellent cards and if you have the money for a Ti you should go ahead with. Can I run ML and deep learning algorithms on this? I think around watts will keep you future proof on upgrading to 4 GPUs; 4 GPUs on watts can be problematic. How good is GTX m for deep learning?

How does this work from a deep learning perspective currently using theano. System has Private Settings. To be more precise, I only care of the half precision float 16 when it brings a considerable speed improvement In Tesla roughly twice as fast compared to float I bought a Ti, and things have been great. So do not waste your time with CUDA! To make the choice here which is right for you. Do I want to upgrade GPUs or the whole computer in a few years? That is fine for a single card, but as soon as you stack multiple cards into a system it can produce a lot of heat that is hard to get rid of. The comparisons are derived from comparisons of the cards specs together with compute benchmarks some cases of cryptocurrency mining are tasks which are computationally comparable to deep learning. I would convince my advisor to get a more expensive card after I would be able to show some results. I have no idea if that will work or not. Why it seems hard to find Nvidia products in Europe? Is this going to be too much of an overkill for the Titan X Pascal? Joseph Poon is actually billed as author of the OmiseGo whitepaper. Both for games and for deep learning the BIOS does not matter that much, mixing should cause no issues. I was under the impression that single precision could potentially result in large errors. Here is the board I am looking at. Go instead with a GTX Ti. Albeit at a cost of device memory, one can achieve tremendous increases in computational efficiency when one does cleverly as Alex does in his CUDA kernels.
